Konstantin Simonchik, Co-founder and CSO at ID R&D

Facial deepfakes, fake identity generation, and voice cloning are among the most concerning issues arising from the rapid advancement of Generative Artificial Intelligence (AI). While generative models offer numerous benefits across various industries, they also pose significant threats, especially to identity verification on Know Your Customer (KYC) platforms. This article introduces some of the most frequent malicious uses of generative AI in the context of KYC and offers insights into how platforms can safeguard against these threats.

What is Generative AI?

Generative AI refers to artificial intelligence systems that can autonomously create new, original high-quality content like text, images, audio, and video based on given input data and parameters.

Potential Threats to KYC Platforms

Know Your Customer (KYC) platforms play a pivotal role in the financial sector, ensuring that businesses understand the identity and risk associated with their clients. As AI continues to evolve, so do the challenges faced by KYC platforms. One of the emerging threats is the malicious use of generative AI models.

1. Facial Deepfakes

Recent advancements in GANs (Generative Adversarial Networks) have enabled the creation of highly realistic fake faces, commonly referred to as deepfakes. Tools like StyleGAN can generate artificial but photorealistic portraits simply by tuning parameters in the latent space. While these tools create new, non-existent individuals, other techniques like DeepFaceLab leverage autoencoders to swap faces in images and videos. This face-swapping technology can render a target face onto images, videos, or even live streams. In one notable instance, a deepfake impersonated the chief communications officer of Binance, a leading crypto exchange, during a Zoom call.

Figure. A deepfake generated face from thispersondoesnotexist.com

In the realm of identity verification, facial deepfakes amplify the risks of identity fraud and counterfeit liveness verification. Fraudsters might:

- Submit a deepfaked selfie during onboarding that aligns with the photo on their ID.

- Use face-swapping to overlay their face on video clips of a genuine user executing liveness actions such as blinking or turning, potentially deceiving many active liveness detection systems.

- Modify a stolen image or video of the authentic user with neural rendering techniques to change facial expressions and stances. Such simulated imagery and videos can bypass active presentation attack detection methods as well.

For identity verification in KYC platforms, it’s crucial to recognize that regardless of the advanced deepfake technique employed, fraudsters must introduce the manipulated image into the system. Being a digital entity, the deepfake must be printed, displayed, or injected into the KYC capturing tool, leaving traces on the image or operating system indicating manipulation. Consequently, a two-level protective approach is advisable:

Level 1. Content artifact detection – a classic deepfake detector that uses techniques such as texture analysis or AI-based classifiers to find deepfake artifacts in the image itself.

Level 2. Channel artifact detection – presentation and injection attack detection that identifies software traces and artifacts evident on the image when processed through virtual cameras, hardware capturers, or OS emulators.
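
The way the two levels combine into a single decision can be sketched as follows. This is a minimal illustration, not the logic of any specific product: the `CaptureScores` type, the `assess_capture` function, the score range, and the 0.5 thresholds are all hypothetical, and the scores are assumed to come from separate content and channel detectors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaptureScores:
    """Hypothetical detector outputs in [0, 1]; higher means more suspicious."""
    content_artifact: float  # Level 1: deepfake artifacts in the image itself
    channel_artifact: float  # Level 2: traces of virtual cameras, capturers, emulators


def assess_capture(scores: CaptureScores,
                   content_threshold: float = 0.5,
                   channel_threshold: float = 0.5) -> str:
    """Accept a capture only if neither level flags it (thresholds are assumptions)."""
    for value in (scores.content_artifact, scores.channel_artifact):
        if not 0.0 <= value <= 1.0:
            raise ValueError("scores must be in the range [0, 1]")
    # Check the channel first: an injected or replayed capture is rejected
    # even if the image content itself looks clean.
    if scores.channel_artifact >= channel_threshold:
        return "reject: channel artifact"
    if scores.content_artifact >= content_threshold:
        return "reject: content artifact"
    return "accept"


if __name__ == "__main__":
    print(assess_capture(CaptureScores(content_artifact=0.1, channel_artifact=0.9)))
    print(assess_capture(CaptureScores(content_artifact=0.1, channel_artifact=0.1)))
```

The point of the design is that a failure at either level is enough to reject, so a flawless deepfake still fails if its delivery channel leaves traces.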

For more information about 2-Level Deepfake detection visit IDLive Face Plus.

2. Fake Identity Generation

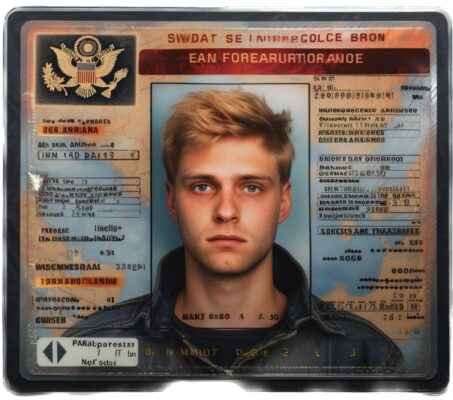

Generative AI has the potential to produce lifelike images, leading to the risk of fabricating fake IDs or passports. However, the present iteration of Generative AI falls short of creating a complete counterfeit document from scratch. For instance, even the cutting-edge Midjourney engine, when prompted with “/imagine US driver license” or similar requests, produces an image that scarcely resembles an actual driver’s license (see picture).

Figure. A Gen AI-generated image of an identity document

The current limitations of generative AI include accurately rendering text and intricate structured visual data, such as the standardized elements found in IDs. Nevertheless, there exists a suite of diverse engines tailored for different components of counterfeit documents:

- Face generation tools (e.g., OpenAI’s DALL-E 2, Stability AI’s Stable Diffusion, Midjourney)

- Fake PII generators (e.g., the Python tool named Faker)

- Document templates accessible on the DarkNet

- Professional image editors (e.g., Adobe Photoshop) – versatile tools that can be used to combine various counterfeit components (like portraits, text, and templates) and refine them into a cohesive whole.

In the foreseeable future, we can anticipate the emergence of tools on the DarkNet that streamline and automate these processes. This underscores the urgency for developing counteractive measures that can detect not just deepfake-generated portraits on IDs but any form of digital tampering on the entire ID image. Moreover, even if fraudsters possess a digitally altered counterfeit document, they would still need to physically print it for KYC verification. At this juncture, presentation attack detection remains crucial. Producing a high-caliber document on premium material remains a hurdle that Generative AI’s advancement alone cannot surmount.

To learn more about a product that offers protection against documents forged with AI-generated or replaced portraits, digital manipulations, printing/copying, and even screen replay, visit IDLive Doc.

3. Synthetic Voice Phishing

Voice cloning technologies have advanced significantly, producing highly convincing synthetic speech. According to the US Federal Trade Commission, imposter scams, which voice cloning makes easier to carry out, were the most frequently reported type of fraud in 2022 and generated the second-highest losses for victims. In 2023, Joseph Cox, a British journalist for Vice, used voice cloning software to bypass the security measures of Lloyds Bank in England. A synthetic clone of his own voice was accepted by Lloyds’ voice recognition software, which gave him access to his account information, including recent transactions and balance details.

Services such as Professional Voice Cloning from ElevenLabs can emulate the intricacies of an individual’s voice with just a handful of audio samples. Malicious actors might employ voice cloning to mimic clients during KYC verification calls. Using voices synthesized from publicly accessible audio clips, they can potentially deceive voice recognition systems. During client onboarding and authentication, these voice deepfakes could be used to give false answers to security queries or initiate unauthorized actions.

Additionally, user-friendly applications and APIs have made voice synthesis systems more accessible. Platforms like Resemble AI, Voicemod, and Modulate enable straightforward voice cloning. As entry barriers diminish, the threat of voice deepfakes intensifies.

To fortify against such voice-driven manipulations, KYC platforms must incorporate voice liveness detection that can identify both replay and modern deepfake voice attacks. The most recent ASVspoof challenge, held in 2021, showed that voice synthesis can be detected with up to 99% accuracy. However, the accuracy drops to 85% when the voice undergoes lossy codec processing. To effectively guard against voice deepfakes, the following strategies are recommended:

- Implementing on-device solutions where voice is captured at a 16kHz sampling rate

- Transmitting the voice to servers in a lossless 16kHz format
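
The two recommendations above can be checked on the receiving side with a small sketch like the one below. It assumes the audio arrives as a WAV payload and uses only Python's standard `wave` module, which parses uncompressed PCM files and raises `wave.Error` for other encodings. The function names and the returned fields are hypothetical. The Nyquist value is included because a 16kHz sampling rate preserves frequencies up to 8kHz, while an 8kHz telephony capture preserves only up to 4kHz.

```python
import io
import wave


def check_voice_capture(wav_bytes: bytes, min_rate_hz: int = 16_000) -> dict:
    """Report whether a WAV payload is uncompressed PCM sampled at min_rate_hz or higher."""
    try:
        with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
            rate = wav.getframerate()
            bits = wav.getsampwidth() * 8
    except wave.Error:
        # The wave module rejects payloads it cannot parse as uncompressed PCM.
        return {"parsed_as_pcm": False, "meets_guidance": False}
    return {
        "parsed_as_pcm": True,
        "sample_rate_hz": rate,
        "bits_per_sample": bits,
        "nyquist_hz": rate / 2,  # highest frequency the capture can represent
        "meets_guidance": rate >= min_rate_hz,
    }


def make_silent_wav(rate_hz: int, seconds: float = 0.1) -> bytes:
    """Build a short mono 16-bit PCM WAV in memory, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate_hz)
        wav.writeframes(b"\x00\x00" * int(rate_hz * seconds))
    return buffer.getvalue()


if __name__ == "__main__":
    print(check_voice_capture(make_silent_wav(16_000)))
    print(check_voice_capture(make_silent_wav(8_000)))
```

A check like this only confirms the container format and sampling rate. It cannot tell whether the audio was upsampled or passed through a lossy codec earlier in the chain, which is one reason on-device capture is recommended.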

Conversely, detecting voice deepfakes in call centers, where voice is captured at an 8kHz rate and transmitted in a compressed format through PSTN, presents a formidable challenge. While passive techniques applied to natural voice dialogues offer some protection, the increasing capability of voice synthesis engines to generate real-time responses may reduce the effectiveness of active detection methods, such as challenge questions.

IDLive Voice Clone Detection is a product designed to identify synthetic/deepfake voices, as well as replay attacks and hardware- and software-based attacks.